An SEO audit is a thorough review of an existing website from a search engine optimization (SEO) perspective. It is used to uncover potential problems and opportunities for optimization. The SEO audit can also be used as a checklist when developing a new website. This will ensure that all important aspects are considered from the start.

Several areas of search engine optimization are interrelated. These include technical SEO, on-page SEO, content SEO, off-page SEO, website performance, and more. An SEO audit takes all these sub-areas into account, although in this article we will focus on the technical part, i.e. WordPress and Google optimization.

Our recommendation for an SEO audit is divided into "editorial SEO" and "technical SEO". This helps with a more practical approach, as often different people or departments are responsible for the content and technical aspects of a website.

If you run a WordPress website, you should perform SEO audits on a regular basis to identify optimization potential in time.

The topic of performance audits is a bit more extensive, so we will write a separate article about it.

Tools & Resources

SEO Plugins for WordPress

A good SEO plugin for WordPress is essential for search engine optimization unless you develop certain features yourself. There are both free and paid versions available.

Key features of SEO plugins include XML sitemap creation, metadata customization and preview, structured data support, redirect management, and readability and link analysis.

SEO plugins are usually well-documented and accessible to both administrators and authors. When we talk about SEO plugins below, we are referring to this type of plugin.

Among the most popular SEO plugins are:

In any case, it is important to carefully compare the features and prices of SEO plugins before making a decision.

Monitoring and reporting tools for SEO

SaaS SEO monitoring and reporting platforms allow website owners to monitor their SEO performance by displaying keyword rankings, traffic data, and other important metrics. They also allow you to automate SEO audits or real-time monitoring. You can view, compare, and analyze data to optimize your SEO strategy and get better results.

The big downside of these all-in-one platforms is that they are often very expensive, which can be a hurdle for small and medium-sized projects. In addition, their range of functions is often oversized and not suitable for every project.

Free SEO Audit Tools

Besides expensive SEO platforms, there are free tools that can help you with an SEO audit. In this article, we will introduce some of them.

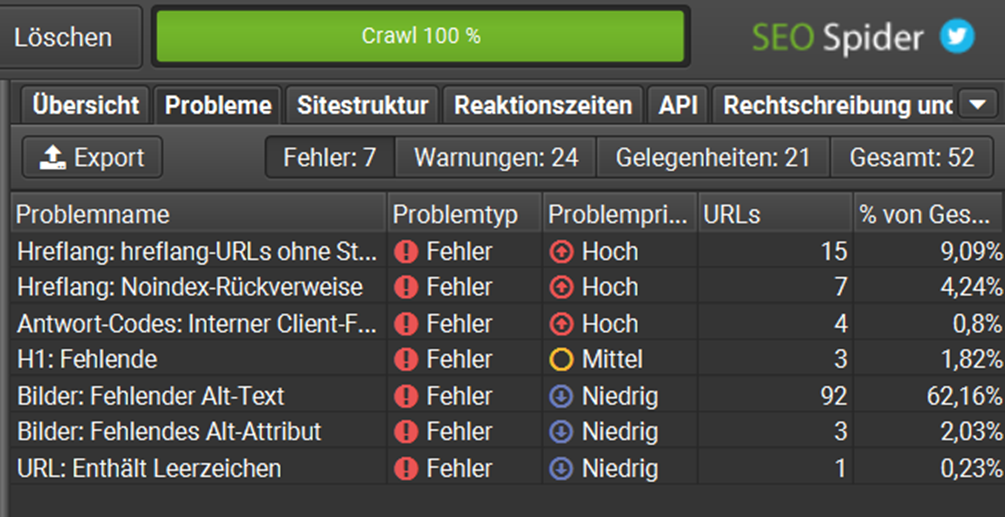

- SEO Spider by Screaming Frog: the free version automates steps of the audit and creates overviews. SEO Spider is well documented with guides and tutorials.

- Google Search Console and PageSpeed Insights help evaluate website performance and provide useful SEO tips about the project from the perspective of the world's largest search engine.

- Other free tools allow you to validate structured data, SSL/TLS certificates, and security headers. Read more about this in the rest of this article.

Useful resources for an SEO audit

With each change in the Google algorithm, best practices and strategies evolve. At the same time, many myths about SEO persist. This is due to the lack of transparency on the part of search engine operators.

Reliable statements from Google and other search engines are rare and often vague. This makes long-term planning of SEO strategies difficult. To stay up to date, it is important to use trustworthy sources of information. SEO blogs and newsletters from experts, as well as documentation and guides, are good sources of up-to-date and reliable information.

Here is some useful documentation that Google provides on the topic of SEO:

- Google SEO Basics

- List of Google Ranking News

- Reports and tools from Google

- All about Core Web Vitals

- PageSpeed Insights documentation

- Search Console documentation

Preparation of the SEO audit

The beginning of an SEO audit is information gathering and initial screening. Before you start, be clear about the site's baseline, focus, and goals, and keep them in mind throughout all steps of the SEO audit. Corporate websites, blogs, online magazines, and online stores have different SEO needs.

During the SEO audit, it is important to look at the site from a visitor's perspective to avoid skewing the results. You should be logged out of the WordPress backend, as the output can differ between logged-in and logged-out users. It's best to use a private browser window or incognito mode.

If you don't use an SEO plugin, you should install one now. Such a plugin will help you to exploit the full SEO potential of the site.

Also, collect all SEO-relevant information available for the website, such as visitor data from web analytics software like Google Analytics or Matomo.

Google Search Console

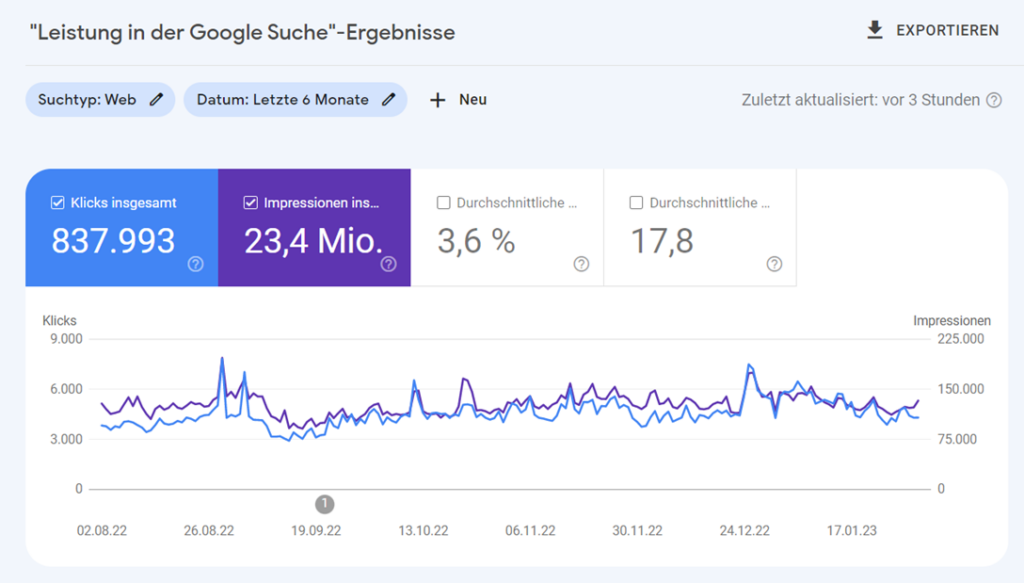

Google Search Console is our first port of call because it provides the best insight into specific website data.

This includes the following information:

- Search engine traffic monitoring: Detailed information on website traffic performance, including the number of impressions, clicks, and CTR

- Keyword Optimization: Identification of relevant search terms

- Technical monitoring: Reports on technical errors that may prevent the site from being properly indexed by search engines

- Mobile Optimization: Insight into the mobile performance of the site and identification of content display issues on smartphones

- Rich results: Monitoring and optimization, including schema markup integration.

The free Google Search Console is an essential tool for optimizing website performance and search engine visibility. Visit Google's Help Center for instructions on setting up Search Console.

Note that it may take some time for Search Console to collect enough meaningful data after setup. Ideally, Search Console is already up and running at the time of the SEO audit. Final data in Search Console is also only displayed with a delay of about 48 hours. For real-time data, use Matomo or Google Analytics.

PageSpeed Insights

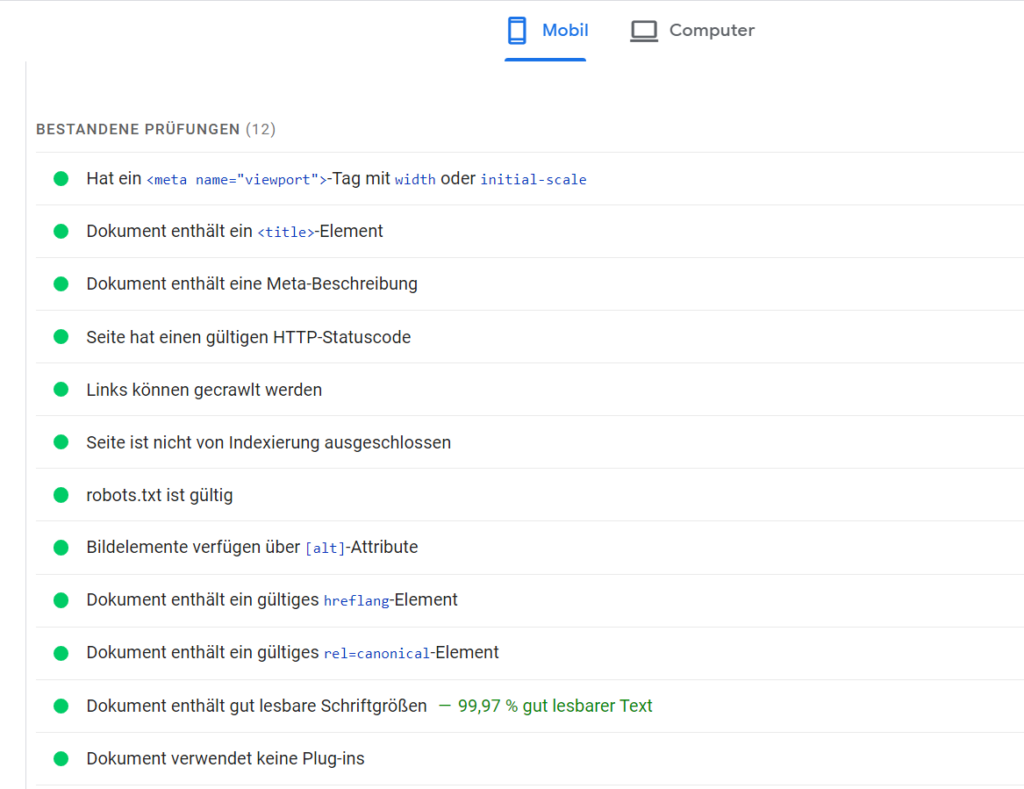

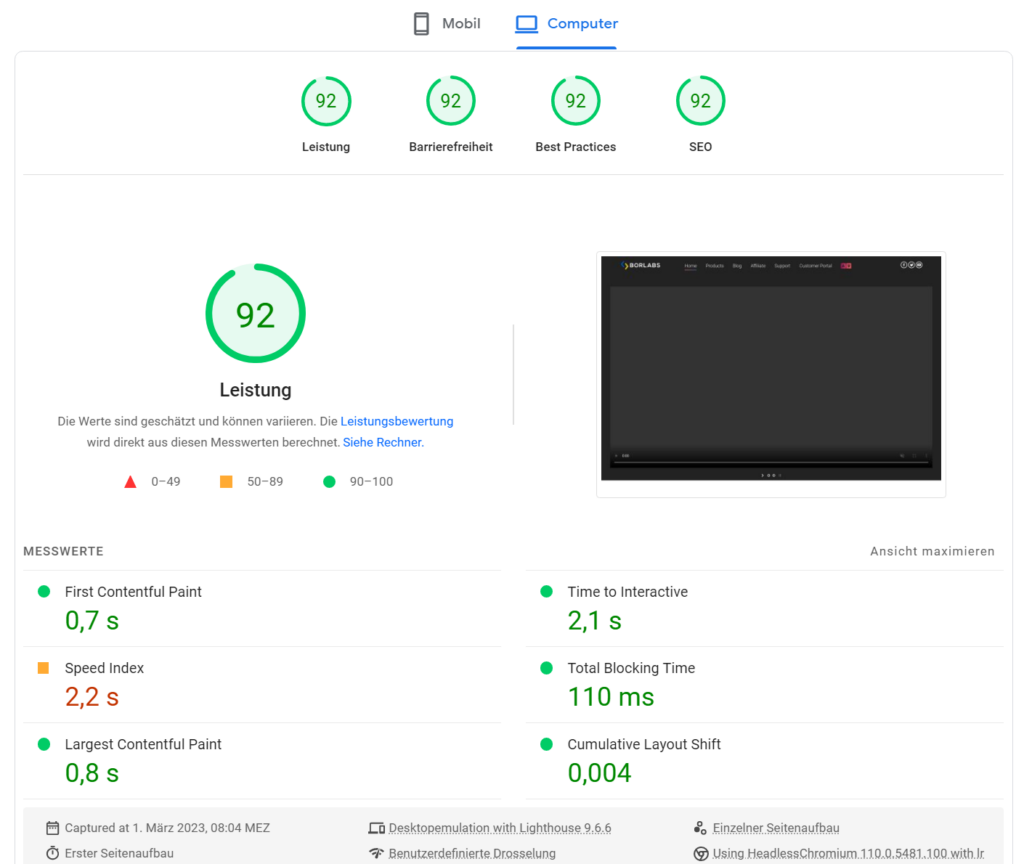

Analyze the site with PageSpeed Insights for performance, accessibility, and SEO tips and recommendations.

SEO Spider

SEO Spider crawls the website, detects many errors and problems along the way, and summarizes them in a clear report.

Part 1: Editorial SEO Audit

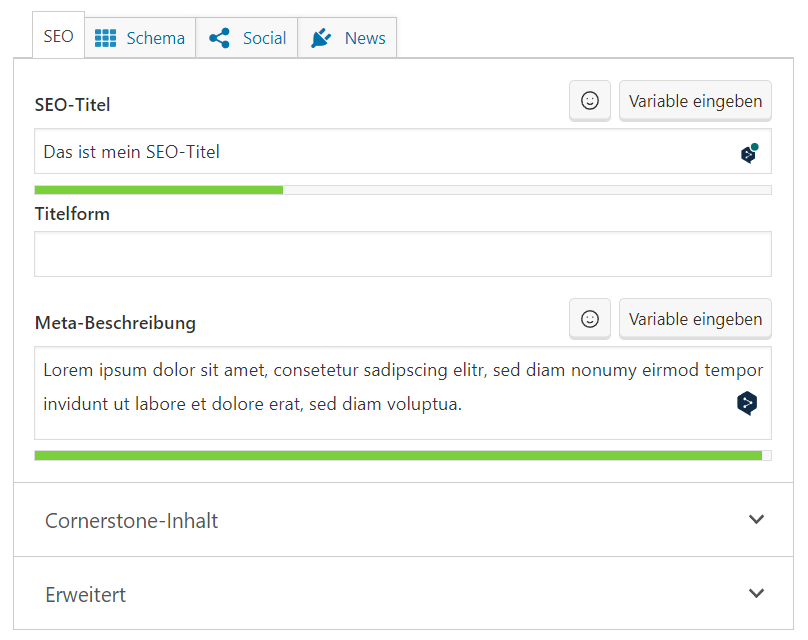

This part of the audit is directly related to the content of a website and should be taken into account when creating and revising pages and posts. When an SEO plugin is used, editors can make most of the settings conveniently in the WordPress editor.

You should check the following elements during an editorial SEO audit.

Page title and meta description

The title and meta description are not displayed in the content of a web page; they are located in its <head> section. The title of a page appears as a heading in search results and in the browser tab. The meta description is a short teaser about the content of a page and is also displayed in search results (also called SERPs, Search Engine Result Pages).

Titles and descriptions can and should be created individually for each page and should be informative, meaningful, and appealing. The use of special characters or emojis should be avoided.

Keep the length of the title and description within the recommended limits to avoid truncation in search results. SEO plugins help with this: Yoast, for example, shows a colored bar indicating whether the length fits. The free SERP snippet generator from Sistrix is also very helpful.

Actions: Check page titles and descriptions for optimal content and length by searching the source code of the pages for the <title> and <meta name="description"> tags, or use a plugin or SEO Spider to list them automatically. Improve titles and descriptions using your SEO plugin.
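To speed up this check across many pages, a small script can pull both tags out of a page's HTML. The following is a minimal Python sketch using only the standard library. The limits of 60 and 160 characters are assumed rules of thumb, since Google truncates snippets by pixel width rather than by character count.

```python
from html.parser import HTMLParser

# Assumed rule-of-thumb limits in characters. Google truncates snippets by
# pixel width, so these are only rough warning thresholds.
TITLE_MAX = 60
DESCRIPTION_MAX = 160


class HeadMetaParser(HTMLParser):
    """Collects the <title> text and the content of <meta name="description">."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_title_and_description(html):
    """Return a list of warnings about the title and meta description of one page."""
    parser = HeadMetaParser()
    parser.feed(html)
    warnings = []
    title = parser.title.strip()
    if not title:
        warnings.append("missing <title>")
    elif len(title) > TITLE_MAX:
        warnings.append(f"title is {len(title)} chars (> {TITLE_MAX})")
    description = (parser.description or "").strip()
    if not description:
        warnings.append("missing or empty meta description")
    elif len(description) > DESCRIPTION_MAX:
        warnings.append(f"description is {len(description)} chars (> {DESCRIPTION_MAX})")
    return warnings
```

For example, `check_title_and_description('<title>SEO Audit Checklist</title>')` returns `['missing or empty meta description']`. The HTML could come from a saved page source or an HTTP request.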

Headings

Headings, especially H1 and H2 headings, are important for SEO because they indicate the content of your page and help determine how that content ranks in search engines.

Use relevant headings: the H1 heading should describe the content of the page, and each H2 heading the content of the section that follows it. The H1 should contain the most important keyword related to the topic of the page, ideally within the first third of the heading.

Use the main keyword related to the page's topic at least once in an H2 heading. Use other appropriate keywords in H2 headings and in the text that follows.

Avoid too many or too few headings (rule of thumb: one H2 heading per 300 words) or unnecessary and inappropriate keywords. Use a clear hierarchy: each page should have only one H1 heading. Use H2 to H6 headings to break up the content of the page.

Actions: Identify duplicate H1 headings in the source code of the pages, or use a plugin or SEO spider for automatic detection. Revise the headings according to the above requirements.
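The heading rules above can be partly automated. This minimal sketch counts the H1 elements on a page and flags jumps of more than one level (for example from H2 straight to H4). Treating skipped levels as a problem is an assumption derived from the "clear hierarchy" rule, not a hard requirement.

```python
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Records [level, text] for every <h1> to <h6> element in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently being read

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self.headings.append([self._level, ""])

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self._level = None

    def handle_data(self, data):
        if self._level is not None:
            self.headings[-1][1] += data


def audit_headings(html):
    """Return a list of problems: H1 count other than one, and skipped heading levels."""
    parser = HeadingParser()
    parser.feed(html)
    problems = []
    h1_count = sum(1 for level, _ in parser.headings if level == 1)
    if h1_count != 1:
        problems.append(f"expected exactly one H1, found {h1_count}")
    previous = 0
    for level, text in parser.headings:
        # Going deeper by more than one level (e.g. H2 -> H4) breaks the hierarchy.
        if previous and level > previous + 1:
            problems.append(f"skipped level: H{previous} -> H{level} ({text.strip()!r})")
        previous = level
    return problems
```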

External Links

External links, also known as outbound links, lead from one website to another (external) website. Link only to relevant, high-quality, trustworthy sites. Sensibly placed links to sites with high topical authority show the search engine that your content is sound. Avoid unnecessary links, too many links, or links to questionable sites. This can hurt your ranking.

If you don't want search engines to follow an external link, give it an appropriate rel attribute.

Below are some situations where you should specifically tag an external link:

- No-Follow: Links to sites that you do not want to endorse in the eyes of search engines (such as advertising links in sponsored posts) should be set to rel="nofollow". However, this is not a cure-all for the potential ranking consequences of regularly linking to questionable sites or content.

- Affiliate links: Paid links should be tagged with rel="sponsored" to avoid being considered search engine spam.

- User-Generated Content: Links generated by users in forums, comments or similar places should be tagged with rel="ugc".

Example: <a href="https://example.com" rel="nofollow">Example Link</a>

Actions: Check the rel attributes of the external links on the pages, or use a plugin or SEO Spider to list them automatically. If necessary, add the values "nofollow", "sponsored" or "ugc" to external links.
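As a minimal sketch of such a listing, the following function collects all links on a page that point to another host and shows their rel values. It assumes the page URL is known so that relative links can be resolved; in this simplified version, subdomains such as www. count as external.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkParser(HTMLParser):
    """Collects (href, set of rel tokens) for every <a> element with an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if href:
            rel = set((attrs.get("rel") or "").lower().split())
            self.links.append((href, rel))


def external_links(html, page_url):
    """Return (absolute URL, sorted rel values) for links to other hosts."""
    own_host = urlsplit(page_url).hostname
    parser = LinkParser()
    parser.feed(html)
    result = []
    for href, rel in parser.links:
        absolute = urljoin(page_url, href)
        parts = urlsplit(absolute)
        # Skip mailto:, tel: and similar schemes as well as same-host links.
        if parts.scheme in ("http", "https") and parts.hostname != own_host:
            result.append((absolute, sorted(rel)))
    return result
```

Links whose rel list is empty are followed by search engines and are the ones to review first.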

Broken Links

Broken links are outdated or invalid links on a web page that result in a 404 ("page not found") error.

From an SEO perspective, broken links can make it difficult for search engines to index and rank the content of a page, which can have a negative impact on a website's search engine rankings.

Actions: Check the menu structure and content for broken links, or use SEO Spider for automatic detection. Update the links accordingly.
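A broken-link check boils down to requesting each URL and looking at the HTTP status code. The sketch below is a minimal version under several assumptions: it sends HEAD requests with Python's urllib (some servers answer HEAD with 405 and would need a GET instead), treats any 4xx/5xx status or an unreachable host as broken, and accepts a different status function as a parameter, for example for testing.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the final HTTP status for url via a HEAD request, or None if unreachable.

    urlopen follows redirects, so a redirect to a working page counts as OK.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses are raised as HTTPError
    except OSError:
        return None  # URLError, timeouts and other network errors


def find_broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for URLs that are unreachable or answer with 4xx/5xx."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```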

Duplicate Content

Duplicate content is content that exists on multiple web pages or URLs. Examples of duplicate content include standardised landing pages, WordPress functions that generate the same content on multiple URLs, or unauthorised copying of content.

Duplicate content makes it difficult for search engines to determine the most relevant content for a given search query. The visibility of your site in the SERPs can suffer as a result.

Actions: Identify duplicate content on the site or use a plugin or SEO spider for automatic detection. Rewrite the same or very similar content, or ensure that canonical tags correctly point to the original content, as described in the next step.
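For a first rough check, page texts can be compared pairwise. This minimal sketch uses Python's difflib to flag page pairs whose normalized text is at least 90% similar. The threshold is an arbitrary assumption, the input dictionary of URL to extracted page text is hypothetical, and pairwise comparison only scales to a modest number of pages.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lower-case and collapse whitespace so formatting differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_similar_pages(pages, threshold=0.9):
    """Return (url_a, url_b, ratio) for page pairs at least `threshold` similar.

    pages: dict mapping URL -> extracted main text of the page.
    """
    normalized = {url: normalize(text) for url, text in pages.items()}
    pairs = []
    for (url_a, text_a), (url_b, text_b) in combinations(normalized.items(), 2):
        ratio = SequenceMatcher(None, text_a, text_b).ratio()
        if ratio >= threshold:
            pairs.append((url_a, url_b, round(ratio, 2)))
    return pairs
```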

Canonicals

Canonicals are link tags in the <head> section of a web page that indicate which URL is the preferred (canonical) version of a page. They help search engines deal with duplicate content by indicating which URL should be treated as the reference version.

For example: <link rel="canonical" href="https://example.com/" />

Online shops, blogs, and landing pages need the canonical tag when the same content is available at different URLs.

Actions: Use a plugin or SEO Spider to list the canonical URLs automatically. Check that the canonical URLs of duplicate content point to the correct page. Set correct canonical links via your SEO plugin or use unique content for your pages.
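A minimal sketch of the canonical check: the function below extracts the canonical link from a page, resolves it against the page URL and optionally compares it with the URL you expect. The expected URL is an input you supply; the sketch does not determine it automatically.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def check_canonical(html, page_url, expected=None):
    """Return None if the page has exactly one (matching) canonical URL, else a message."""
    parser = CanonicalParser()
    parser.feed(html)
    if len(parser.canonicals) != 1:
        return f"expected one canonical tag, found {len(parser.canonicals)}"
    canonical = urljoin(page_url, parser.canonicals[0])
    if expected is not None and canonical != expected:
        return f"canonical points to {canonical}, expected {expected}"
    return None
```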

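Hreflang Attributes

"Hreflang" attributes on link elements in the <head> section of your web page indicate the language and geographical region for which a particular page is intended.

Use hreflang attributes on every page that is written in a specific language or intended for a specific country, so that search engines can show the appropriate version to each audience.

Example: <link rel="alternate" hreflang="en-US" href="https://example.com/" />

The language of the page itself is declared separately in the lang attribute of the html element, for example <html lang="en-US">.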

Actions: Check the source code of the website to see if the correct language and region are stored. This is particularly important for multilingual sites. If necessary, change the page language in the WordPress settings or make adjustments to your translation plugin if the hreflang attributes are not set correctly.
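The hreflang values themselves can be checked for their basic form. In this minimal sketch, a value is accepted if it is "x-default" or a two-letter language code, optionally followed by a two-letter region code (for example "en-US"). This is a simplified assumption: it checks only the shape, not whether a code actually exists, and it ignores less common forms such as script subtags.

```python
import re
from html.parser import HTMLParser

# Simplified pattern: "x-default", or a two-letter language code optionally
# followed by a two-letter region code. Case-insensitive.
HREFLANG_PATTERN = re.compile(r"^(x-default|[a-z]{2}(-[a-z]{2})?)$", re.IGNORECASE)


class HreflangParser(HTMLParser):
    """Maps each hreflang value of <link rel="alternate"> elements to its href."""

    def __init__(self):
        super().__init__()
        self.alternates = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "alternate" in rel and attrs.get("hreflang"):
            self.alternates[attrs["hreflang"]] = attrs.get("href")


def check_hreflang(html):
    """Return (hreflang -> href mapping, list of malformed values)."""
    parser = HreflangParser()
    parser.feed(html)
    problems = [
        f"invalid hreflang value {value!r}"
        for value in parser.alternates
        if not HREFLANG_PATTERN.match(value)
    ]
    return parser.alternates, problems
```

A common mistake this catches is an underscore instead of a hyphen, as in "de_DE".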

ALT Attributes

ALT attributes describe visual content, such as images, with text. This supports accessibility, for example when using a screen reader. It also allows search engines to infer the context and meaning of the image, improving visibility and relevance in search results.

In WordPress, you can add ALT descriptions directly in the Media Library: open a file and enter the description of the image in the "Alternative Text" field.

Stick to simple, descriptive phrases to improve accessibility and search engine optimisation. Avoid unnecessary information such as "image" or "logo".

Example: <img src="example.jpg" alt="Golden retriever catching a frisbee in a park">

Actions: Check the WordPress Media Library to see whether your media files have ALT descriptions, or use SEO Spider to list them automatically. Add any missing ALT descriptions.
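If you prefer to check the rendered pages instead of the Media Library, a short script can list images without ALT text. This minimal sketch reports images with no alt attribute separately from images with an empty one, since an empty alt="" is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser


class ImageAltParser(HTMLParser):
    """Sorts <img> elements by the state of their alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src of images without any alt attribute
        self.empty = []    # src of images with an empty alt (fine only if decorative)

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src", "")
        if "alt" not in attrs:
            self.missing.append(src)
        elif not (attrs["alt"] or "").strip():
            self.empty.append(src)


def audit_alt_texts(html):
    parser = ImageAltParser()
    parser.feed(html)
    return {"missing": parser.missing, "empty": parser.empty}
```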

oEmbed / OpenGraph

oEmbed and OpenGraph make it possible to embed content from a website into other platforms, such as social media channels.

With oEmbed, you can embed content such as images, videos or posts without requiring visitors to open a link to the original source.

The OpenGraph protocol provides information about a page or post, such as author, title, description and thumbnail.

The ability to embed has no direct impact on SEO. However, it does make it easier to distribute your content on the web, which in turn drives users to your site. Their signals (such as page views or time spent) are in turn evaluated by search engines and influence the ranking.

Make sure you assign authors to published pages or posts in the WordPress backend. Often only the username "admin" is stored, and this is what is displayed on some platforms (such as the Discord chat platform).

For search engines, however, a real author name is important because it conveys authenticity and authority, which can be a direct ranking factor.

Actions: Check how different pages are displayed on social networks by embedding page URLs in posts on the respective platforms. Adjust the OpenGraph information in the relevant tabs of your SEO plugin (for example, the Social tab in Yoast).
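Before testing on social platforms, you can check whether the basic OpenGraph tags are present at all. This minimal sketch collects the og: meta properties of a page and reports which of the four properties the Open Graph protocol lists as required (og:title, og:type, og:image, og:url) are missing.

```python
from html.parser import HTMLParser

# The four properties the Open Graph protocol lists as required.
REQUIRED_OG = ("og:title", "og:type", "og:image", "og:url")


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..."> values (first occurrence of each property)."""

    def __init__(self):
        super().__init__()
        self.properties = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            # Repeated properties such as several og:image tags keep only the first value.
            self.properties.setdefault(prop, attrs.get("content"))


def audit_open_graph(html):
    """Return (found og: properties, list of missing required properties)."""
    parser = OpenGraphParser()
    parser.feed(html)
    missing = [name for name in REQUIRED_OG if not parser.properties.get(name)]
    return parser.properties, missing
```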

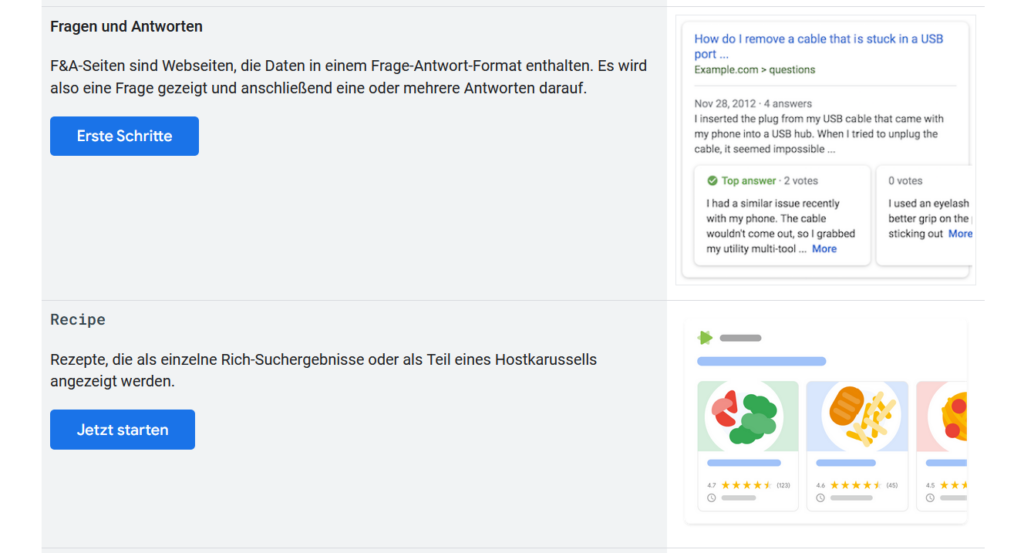

Structured Data: Schema / Rich Results

Structured data provides search engines with specific information about the content of a web page. Structured data can include information about articles, products, organisations, local businesses and more. The vocabulary most commonly used for this is Schema.org, and the resulting code is usually called "schema markup".

Rich results are beautifully designed and interactive search results that you can optimise using schema markup and other techniques. These results can include content previews, reviews and pricing. Rich results increase a site's visibility and click-through rate in search engine results.

Actions: If the site has structured data, spot-check it with a validator and correct any errors. If the site has no structured data at all, check which schemas could be used and whether implementing them could improve visibility. Some SEO plugins already include functions for adding structured data.
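Google's Rich Results Test and the Schema Markup Validator are the tools to use for actual validation. As a rough pre-check, this minimal sketch extracts JSON-LD blocks from a page, reports JSON syntax errors and lists the declared @type values. It does not validate against the Schema.org vocabulary and ignores Microdata and RDFa.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def schema_types(html):
    """Return (list of @type values, list of JSON parse error messages)."""
    parser = JsonLdParser()
    parser.feed(html)
    types, errors = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as error:
            errors.append(str(error))
            continue
        if isinstance(data, list):
            items = data
        elif isinstance(data, dict):
            items = data.get("@graph", [data])  # SEO plugins often wrap items in @graph
        else:
            items = []
        for item in items:
            if isinstance(item, dict) and "@type" in item:
                types.append(item["@type"])
    return types, errors
```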

Part 2: Technical SEO Audit

The second part of the audit concerns the general technical aspects of a website. Usually, only administrators will make any necessary changes.

SSL/TLS

SSL (Secure Sockets Layer) is the predecessor of TLS (Transport Layer Security), an encryption protocol for transferring data over the Internet; the older name "SSL" is still widely used. TLS ensures that sensitive information, such as contact form entries or visitor passwords, reaches its destination securely.

A working SSL/TLS certificate, identified by "https://" in the URL, tells search engines that a website is secure and trustworthy. For Google, for example, this is a ranking factor.

The implementation of SSL/TLS for a domain is usually done through the backend of the hosting provider.

Actions: Verify if and how the site uses SSL/TLS by analysing the site with the SSL Labs tool. If necessary, deploy a missing SSL/TLS certificate or make changes to an existing implementation if the SSL Labs assessment is negative.
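SSL Labs gives a full assessment. For quick monitoring between audits, the expiry date of a certificate can be read with Python's ssl module, as in this minimal sketch. It assumes the host serves HTTPS on port 443. Because the default context verifies the certificate, an invalid or untrusted certificate raises an error instead of returning a result.

```python
import socket
import ssl
import time


def fetch_certificate(host, port=443, timeout=10):
    """Connect via TLS and return the server certificate as a dict.

    The default context verifies the certificate chain and host name, so an
    invalid certificate raises ssl.SSLCertVerificationError instead.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def days_until_expiry(cert, now=None):
    """Days until the certificate's notAfter date (negative if it has expired)."""
    expires = ssl.cert_time_to_seconds(cert["notAfter"])
    if now is None:
        now = time.time()
    return (expires - now) / 86400
```

For example, `days_until_expiry(fetch_certificate("example.com"))` returns the remaining validity in days (network access required).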

HTTP(S) / WWW

If there is a valid SSL certificate for a domain, the domain will be accessible at https:// in encrypted form. However, depending on the hosting configuration, a domain may still be accessible unencrypted at "http://" without an HTTP request being automatically redirected to HTTPS. In this case, there are essentially two versions of the website, one encrypted (HTTPS) and one unencrypted (HTTP).

However, a website and its content should only be accessible via HTTPS. If HTTP requests are automatically redirected to HTTPS, the hosting is configured correctly.

In addition to HTTP(S), a domain can also be reached with the "www." prefix. Again, a website can then exist in two independent versions: with "www." and without. The "www." prefix is now considered obsolete and should no longer be used for new projects.

Instead, the site should primarily be served at "https://" without "www.". Automatic redirects from "https://www." to "https://" remain acceptable.

From what has been described above, there are four possible cases:

- http://example.com (unencrypted, without www): Not allowed. The server must redirect the request to https://example.com.

- https://example.com (encrypted, without www): Best practice. All other variants should be redirected here.

- http://www.example.com (unencrypted, with www): Deprecated and not allowed. The server should at least redirect the request to https://www.example.com, ideally to https://example.com.

- https://www.example.com (encrypted, with www): Deprecated but allowed. The server should redirect the request to https://example.com.

Actions: Check what happens when you access the webpage using the different combinations in the browser. To do this, double-click on the address bar in Chrome to see the prefix (e.g. "https://www.") of a web page.

Make sure that only one version of the website is accessible and that all other variants redirect to it. If HTTP and WWW requests are automatically redirected to "https://", everything is configured correctly.

If the site can be accessed independently at http:// or both with and without "www.", check the hosting settings and make the appropriate changes.
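The four cases can also be checked with a script. This minimal sketch sends one HEAD request per variant without following redirects. It expects each variant to answer with a permanent redirect (301 or 308) straight to https://<domain>/, and that URL itself to answer with 200. This is stricter than the rules above, which still accept a detour via https://www.; the comparison ignores a trailing slash but not other differences such as relative Location headers.

```python
import http.client
from urllib.parse import urlsplit


def first_response(url, timeout=10):
    """Send a single HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    connection_class = (
        http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    )
    connection = connection_class(parts.hostname, timeout=timeout)
    try:
        connection.request("HEAD", parts.path or "/")
        response = connection.getresponse()
        return response.status, response.getheader("Location")
    finally:
        connection.close()


def check_host_variants(domain, get_response=first_response):
    """Map each scheme/www variant of the domain to (status, Location, ok)."""
    target = f"https://{domain}/"
    variants = (f"http://{domain}/", f"http://www.{domain}/", f"https://www.{domain}/", target)
    report = {}
    for url in variants:
        status, location = get_response(url)
        if url == target:
            ok = status == 200  # the preferred version must answer directly
        else:
            # Permanent redirect (301 or 308) straight to the preferred URL.
            ok = status in (301, 308) and (location or "").rstrip("/") == target.rstrip("/")
        report[url] = (status, location, ok)
    return report
```

Calling `check_host_variants("example.com")` performs real requests; passing a different `get_response` function allows testing without network access.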

Security Headers

Security headers help protect the security and integrity of a web page, and search engines prefer secure websites. We describe which security headers exist and how to use them in WordPress in detail in the linked article.

Actions: Check whether security headers are used by analysing the website with the "Webbkoll" tool. Implement any missing security headers.
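Webbkoll covers far more than presence checks, but a quick overview of which common security headers are missing can be scripted as well. The list in this minimal sketch is an assumed baseline of commonly recommended headers, not an official requirement; the header names can come from any HTTP client.

```python
# Assumed baseline of commonly recommended security headers; adjust to your site.
RECOMMENDED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
    "Permissions-Policy",
)


def missing_security_headers(header_names):
    """Return recommended headers absent from a response (names compared case-insensitively)."""
    present = {name.lower() for name in header_names}
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]
```

With the standard library, the header names of a live page can be passed in like this: `missing_security_headers(urllib.request.urlopen("https://example.com/").headers.keys())`.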

Indexing

Indexing is the process by which search engines store web pages and their content. Before that, web crawlers have to find and 'scan' (crawl) the pages. When a page is indexed by a search engine, it means that the search engine has found the page and stored it and its content in its database. Only then can a web page be displayed in search results.

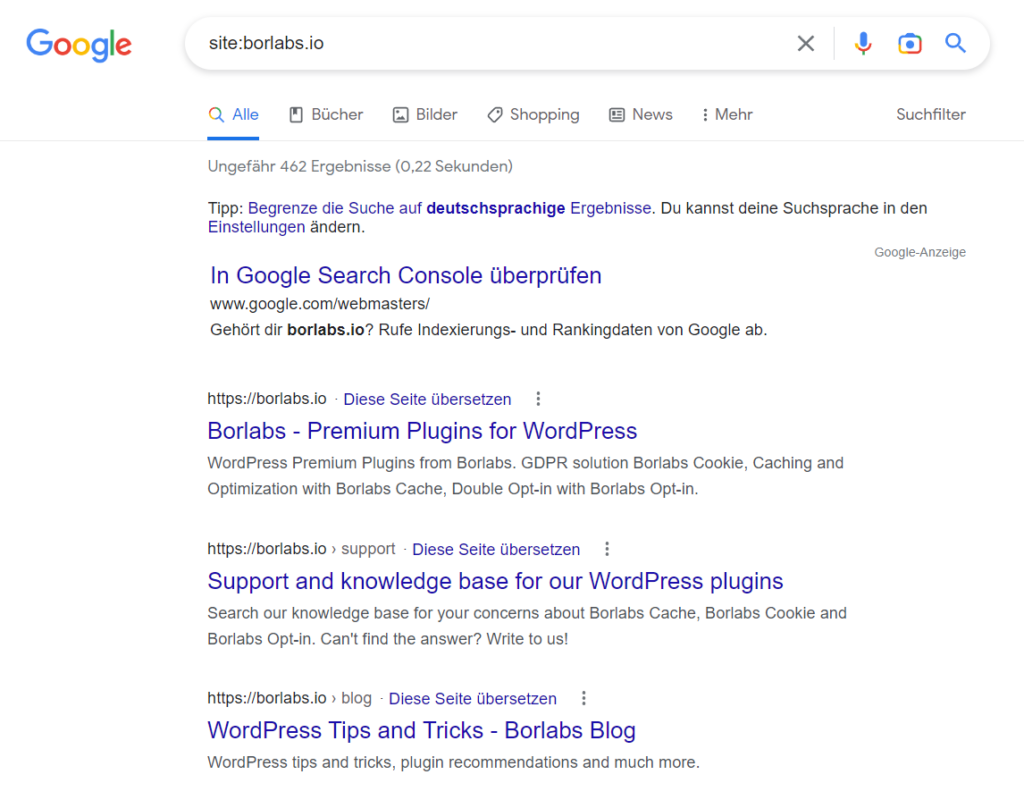

If you want to find out which pages are already in Google's search results, you can use the "site:" operator to limit Google's search to a specific domain:

- "site:example.com" searches for all pages on the domain "example.com".

- "site:example.com keyword" searches for all pages on the domain "example.com" that contain the term "keyword".

Site owners can tell search engines in several ways whether or not to index the entire site or specific pages:

- Robots-Meta-Tag: An HTML tag with instructions whether and how a certain page should be indexed

- Sitemap: One or more lists of URLs that search engines should include in their index.

- robots.txt: A text file with instructions that controls whether and how web crawlers are allowed to visit the web page.

In some cases, it may be useful to exclude certain pages from indexing, for example, to prevent the legal notice (imprint) or privacy policy from appearing in search results. WordPress itself only offers the option to allow or discourage indexing of the entire website. You can find the checkbox under "Settings" > "Reading" > "Discourage search engines from indexing this site".

This will change the Robots meta tag globally in the source code of each page with the statement "index" or "noindex". SEO plugins, on the other hand, allow you to control indexing on a page-by-page basis. They set the "index" or "noindex" instruction in the robots meta tag of each page and automatically include or remove it from the sitemap.

It is up to the search engines to follow the instructions or not - you cannot definitively "ban" a page from being indexed.

Actions: Check that the site is allowed to be indexed by randomly examining the robots meta tag of different pages in the source code, or by analysing page URLs using PageSpeed Insights. You can use a plugin or SEO spider to automatically display a list of all pages and their indexability settings. If necessary, exclude certain pages from indexing.
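For spot checks across many pages, the indexing instructions can be read from the robots meta tag and from the X-Robots-Tag HTTP header, which can carry the same directives. This minimal sketch treats a page as not indexable if either contains "noindex" or "none". It is simplified in that it ignores crawler-specific prefixes in the header (such as "googlebot: noindex").

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = (attrs.get("content") or "").lower()
            self.directives.update(d.strip() for d in content.split(",") if d.strip())


def is_indexable(html, x_robots_tag=""):
    """False if the robots meta tag or the X-Robots-Tag header contains noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = parser.directives | {
        d.strip().lower() for d in x_robots_tag.split(",") if d.strip()
    }
    return not ({"noindex", "none"} & directives)
```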

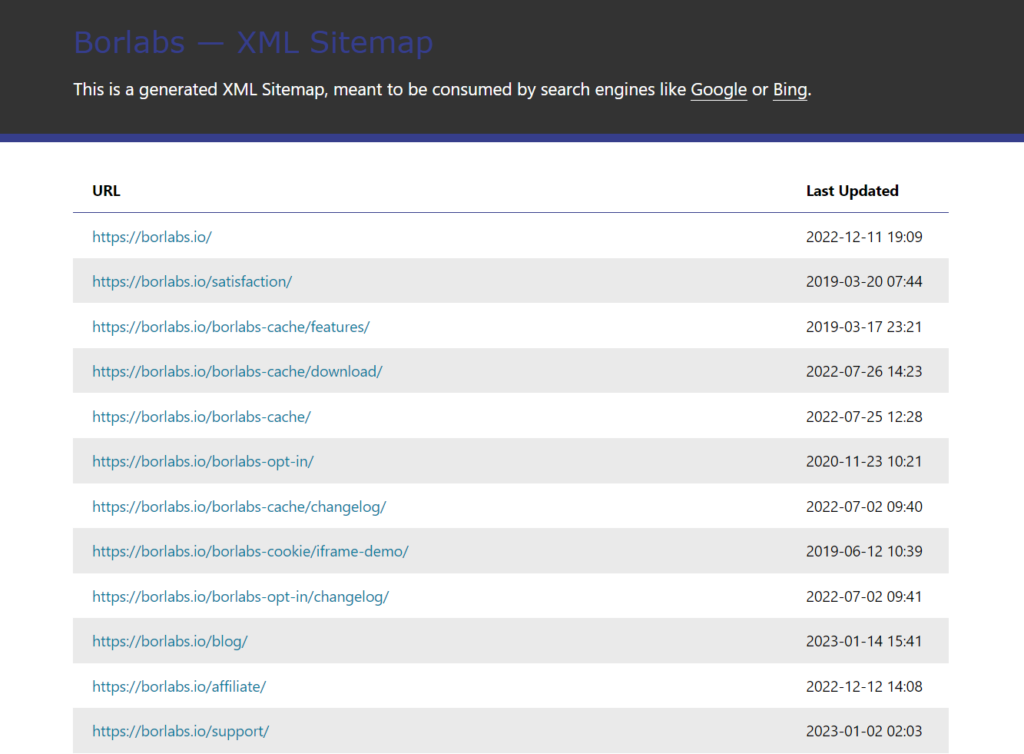

Sitemap

A sitemap is an XML file that provides an overview of a website's structure and the content to be indexed by search engines. For large websites, multiple sitemaps can be used, broken down by pages, articles, categories, archives, authors, etc.

SEO plugins automatically generate and maintain XML Sitemaps. Sitemap URLs may vary depending on the SEO plugin used:

- https://example.com/sitemap.xml

- https://example.com/sitemap_index.xml

- https://example.com/wp-sitemap.xml

Actions: Check the accessibility and content of the sitemap(s) by viewing the XML file(s) in your browser. If necessary, make changes to the sitemap using an SEO plugin by disabling unwanted sitemaps, such as an author sitemap, or by creating a sitemap for your own post types.
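Viewing the sitemap in the browser works well for small sites. For larger ones, this minimal sketch parses a sitemap file with Python's ElementTree and distinguishes a sitemap index (which lists further sitemaps) from a URL set (which lists pages). It assumes the standard sitemaps.org namespace required by the sitemap protocol.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def parse_sitemap(xml_data):
    """Return ("index", [sitemap URLs]) or ("urlset", [page URLs]) for a sitemap file.

    xml_data may be str or bytes (e.g. the body of an HTTP response).
    """
    root = ET.fromstring(xml_data)
    if root.tag == f"{{{NS}}}sitemapindex":
        locs = root.findall(f"{{{NS}}}sitemap/{{{NS}}}loc")
        kind = "index"
    elif root.tag == f"{{{NS}}}urlset":
        locs = root.findall(f"{{{NS}}}url/{{{NS}}}loc")
        kind = "urlset"
    else:
        raise ValueError(f"unexpected root element: {root.tag}")
    return kind, [(loc.text or "").strip() for loc in locs]
```

For a sitemap index, each returned URL can be fetched and parsed the same way to list all pages.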

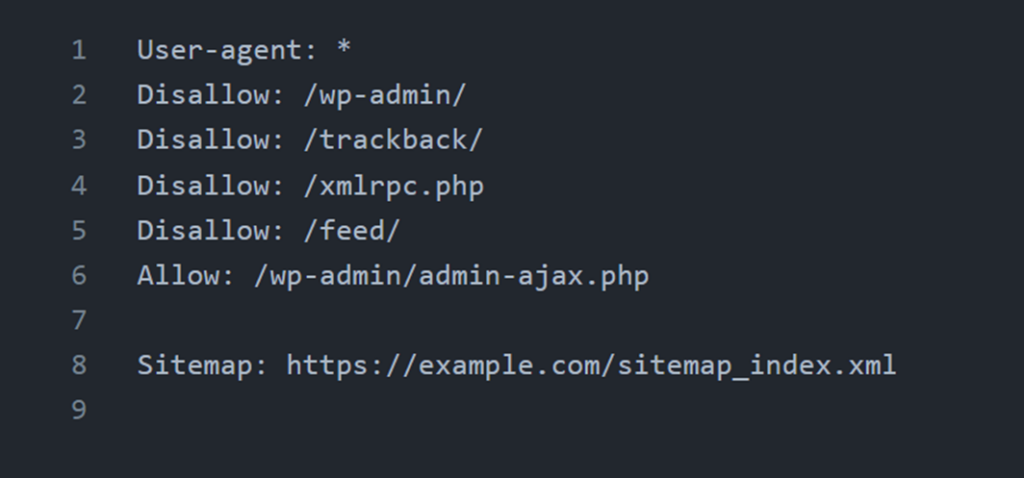

Robots.txt

Whereas the sitemap lists the pages that should be indexed, robots.txt controls which pages and directories search engine crawlers may visit. It can also contain the URL of the sitemap. The file must be located in the root directory of the website (https://example.com/robots.txt) and is usually generated automatically by an SEO plugin.

The robots.txt file is an important standard, but not mandatory. Search engines should follow the instructions in the robots.txt file. It is important for SEO that robots.txt does not block files or directories that should be publicly accessible, such as the CSS and JavaScript files needed to render the pages.

Actions: Check the accessibility and the robots.txt entries by accessing the following file: https://example.com/robots.txt. Make sure that the URL to the (root) sitemap is valid. If necessary, make changes to the text file.
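Python's standard library includes a robots.txt parser that can be used for a quick check of the sitemap entries and of whether important URLs are blocked. Note that urllib.robotparser applies rules in file order (first match wins), while Google uses the most specific matching rule, so results can differ when Allow and Disallow rules overlap. The user agent "Googlebot" is an assumed default.

```python
from urllib.robotparser import RobotFileParser


def audit_robots_txt(robots_txt, urls, user_agent="Googlebot"):
    """Return (sitemap URLs declared in robots.txt, URLs the user agent may not crawl)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [url for url in urls if not parser.can_fetch(user_agent, url)]
    # site_maps() returns None when no Sitemap line exists.
    return parser.site_maps() or [], blocked
```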

Redirects

A redirect automatically forwards a request for one URL to a different URL. This is useful for both users and search engines, for example if you change or delete the URL of a post. Some SEO plugins automatically generate redirects in these cases.

Redirects should work correctly and use the right type:

- 301 for permanent redirects

- 302 for temporary redirects

Redirect errors, such as a redirect loop, can have a negative impact on search engine optimisation.

An advantage of redirects is that the link authority of the original page is passed on to the target page. This means, for example, that the ranking can be maintained.

Actions: Ensure that you create redirects manually if necessary, or that they are created automatically by a plugin. Check your site manually for incorrect redirects, or use SEO spiders for automatic detection to specifically identify errors. Check the redirect list (often found in the .htaccess file) for plausibility and anomalies, and make changes if necessary.
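Once you have a list of redirects (for example exported from a redirect plugin or copied from .htaccess), chains and loops can be found by following each entry. In this minimal sketch the redirects are a hypothetical dictionary from source path to target path; it reports loops and chains that need more than one hop.

```python
def follow_redirects(redirects, start, max_hops=10):
    """Follow the source -> target mapping from `start`.

    Returns (path, problem), where problem is None, "loop" or "too many hops".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        if len(path) - 1 > max_hops:
            return path, "too many hops"
        seen.add(current)
    return path, None


def audit_redirects(redirects):
    """Report every source whose redirect loops or needs more than one hop (a chain)."""
    report = {}
    for source in redirects:
        path, problem = follow_redirects(redirects, source)
        if problem or len(path) > 2:
            report[source] = (path, problem or "chain")
    return report
```

Chains are best resolved by pointing every source directly at the final target.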

Theme

When visually designing a website, you need to consider factors that can affect usability, readability and SEO.

- Text size: The text on the website should be large enough to be easily read on all devices.

- Spacing between elements: The spacing between lines of text, headings, links, images and other elements should be generous enough to create a clear hierarchy and overview on the web page. This prevents content from overlapping and keeps links far enough apart to be tapped reliably with touch input.

- Contrast ratio: The contrast between text colour and background colour should be high enough to ensure good readability on all devices.

- Responsive design: The website should be optimised for different devices (such as desktop PCs, laptops, tablets and smartphones).

Actions: Check your website for usability and responsiveness by analysing random page URLs using PageSpeed Insights. Also perform a manual visual check on various devices. Implement recommendations from the PageSpeed Insights analysis as appropriate.
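PageSpeed Insights reports contrast problems automatically, but it can help to check a color pair while designing the theme. This sketch implements the contrast ratio as defined in WCAG 2.x; the thresholds of 4.5:1 for normal text and 3:1 for large text correspond to conformance level AA.

```python
def relative_luminance(hex_color):
    """Relative luminance of an sRGB color such as "#1a2b3c", as defined in WCAG 2.x."""
    hex_color = hex_color.lstrip("#")
    channels = []
    for i in (0, 2, 4):
        c = int(hex_color[i:i + 2], 16) / 255
        # Linearize the gamma-encoded sRGB channel value.
        channels.append(c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = channels
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between 1:1 and 21:1 (returned as a float from 1 to 21)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """WCAG 2.x level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

For example, grey #767676 on white just passes AA for normal text, while the slightly lighter #777777 does not.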

Performance

Website performance is a very important ranking factor for search engines. A fast loading time contributes to a better user experience and reduces the likelihood of visitors leaving the site prematurely. Search engines receive signals from visitors, such as dwell time or load time, and use them to evaluate the performance of a website.

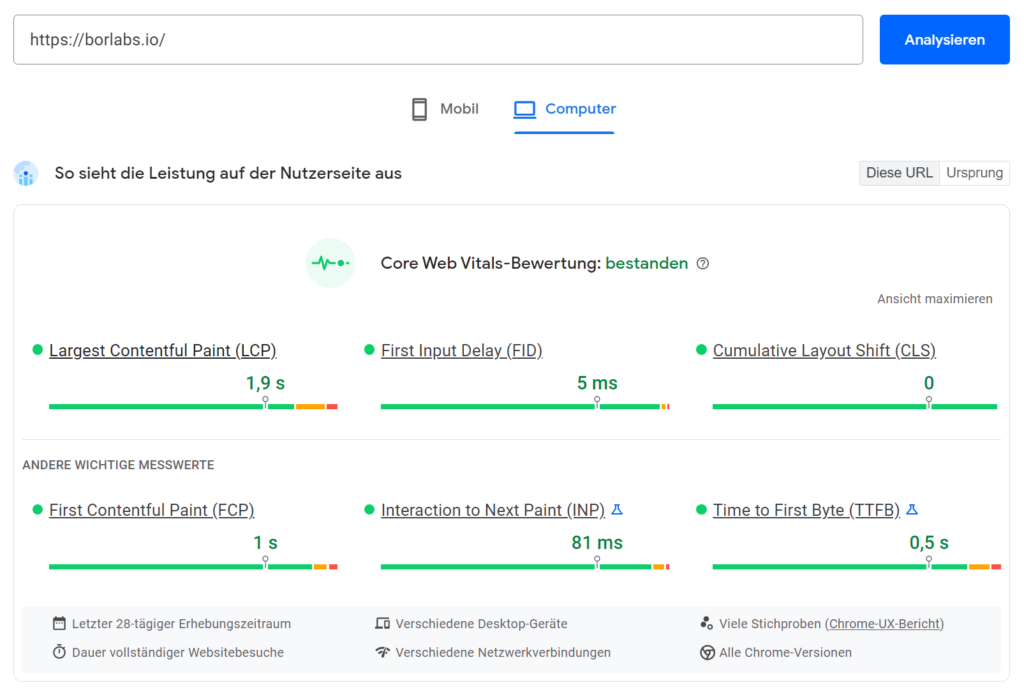

Google's Core Web Vitals are three key indicators of a website's user experience. These metrics measure a page's loading performance, visual stability and interactivity. The Core Web Vitals are:

- Largest Contentful Paint (LCP): The loading time for the largest visible content on the page.

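- First Input Delay (FID): The delay between a user's first interaction (such as a click or tap) and the moment the browser can begin processing it. Since March 2024, Google has replaced FID with Interaction to Next Paint (INP), which measures the responsiveness to all interactions during a page visit.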

- Cumulative Layout Shift (CLS): Movement of visible content during load time (such as banner ads).

If there is not enough field data available due to a low number of hits on a website, the only option is the 'lab measurement', which PageSpeed Insights lists below the Core Web Vitals.

The calculated score is not particularly meaningful here, but the individual measurements listed are a good indication of whether a website would meet the Core Web Vitals.

PageSpeed Insights also displays clues as to which parts are negatively impacting performance. Review the metrics, hints, and recommendations and assess whether website performance optimization is needed.

There are several ways to improve the performance of a WordPress website. Here are some examples:

- Use of fast and powerful hosting

- Install and use a caching plugin

- Optimise images and media

- Disable unnecessary plugins

- Use of a fast and lightweight WordPress theme

- Use of a Content Delivery Network (CDN)

Actions: Check website performance by randomly analysing page URLs via PageSpeed Insights or by accessing the Core Web Vitals tab in Search Console. If enough user signals (field data) are available, PageSpeed Insights will indicate whether the Core Web Vitals are met. If this is the case for desktop and mobile, you don't need to take any further action.
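For field data, each metric is assessed at the 75th percentile of page visits against Google's published thresholds. This minimal sketch encodes those thresholds and rates a set of values; the input dictionary is hypothetical and would be filled from PageSpeed Insights or Search Console. INP, which replaced FID as a Core Web Vital in March 2024, is included alongside the older FID thresholds.

```python
# Google's published Core Web Vitals thresholds, assessed at the 75th percentile:
# (upper limit for "good", upper limit for "needs improvement").
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds; replaced FID in March 2024
    "FID": (100, 300),    # milliseconds; only relevant for older reports
    "CLS": (0.1, 0.25),   # unitless layout shift score
}


def rate(metric, value):
    """Rate a 75th-percentile value as "good", "needs improvement" or "poor"."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(p75_values):
    """True only if every supplied metric is rated "good"."""
    return all(rate(metric, value) == "good" for metric, value in p75_values.items())
```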